Unconstrained Optimization

Unconstrained optimization problems involve finding the optimal solution that minimizes or maximizes an objective function without any constraints. Unconstrained optimization is a fundamental problem in many fields, including machine learning, statistics, and operations research.

Types of Unconstrained Optimization:

- Local Optimization: Finds the optimal solution in a neighborhood of an initial point.

- Global Optimization: Finds the globally optimal solution, which may require exploring the entire search space.

Mathematical Formulation

Optimization of an objective function without any constraints on the decision variables.

Minimize or Maximize: f(x)

Where:

- f(x) is the objective function.

- x is the vector of decision variables.

Methods for Unconstrained Optimization

- Gradient Descent: For smooth functions, follows the direction of steepest descent (or ascent for maximization).

- Newton’s Method: Uses second-order derivatives (Hessian matrix) to find optimal points.

- Conjugate Gradient Method: Suitable for large-scale problems.

- Simplex Search: For non-differentiable functions.

Applications

- Machine learning (e.g., training models via loss minimization).

- Curve fitting and regression.

- Signal processing.

Challenges

- Sensitive to initial guesses.

- Risk of getting stuck in local optima (especially for non-convex functions).

Constrained Optimization

Constrained optimization problems involve finding the optimal solution that satisfies a set of constraints, in addition to minimizing or maximizing an objective function. The constraints can be equality constraints, inequality constraints, or a combination of both. Constrained optimization problems are common in many fields, such as engineering, economics, and operations research.

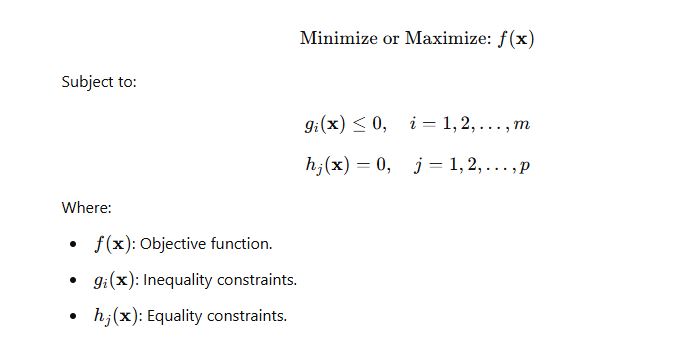

Mathematical Formulation

Optimization of an objective function subject to one or more constraints on the decision variables.

Methods for Constrained Optimization

- Lagrangian Formulation: Introduces a Lagrange multiplier to convert the constrained problem into an unconstrained problem.

- Karush-Kuhn-Tucker (KKT) Conditions: Generalizes Lagrange multipliers to inequality-constrained problems.

- Penalty Methods: Converts constrained problems into unconstrained ones by adding a penalty term for violating constraints.

- Interior Point Methods: Iteratively approaches the feasible region’s boundary from the interior.

- Sequential Quadratic Programming (SQP): Solves a sequence of quadratic approximations to the problem.

Applications

- Portfolio optimization (maximize returns under risk constraints).

- Resource allocation (e.g., budget or capacity limitations).

- Engineering design (e.g., material strength, size restrictions).

- Production planning.

Challenges

- Higher computational complexity than unconstrained optimization.

- Constraints may create non-convex or disjoint feasible regions.

Key Difference

| Basis | Unconstrained Optimization | Constrained Optimization |

|---|---|---|

| Constraints | No restrictions on decision variables | Subject to equality/inequality constraints |

| Complexity | Simpler, fewer computational resources | More complex, requires advanced techniques |

| Methods | Gradient-based or direct search methods | Lagrange multipliers, KKT, interior point |

| Applications | Broad problems without external limits | Practical problems with real-world constraints |

Which to Use?

- Use unconstrained optimization when no restrictions exist on the variables.

- Use constrained optimization when the problem involves real-world restrictions, such as budgets, resource limits, or regulatory requirements.