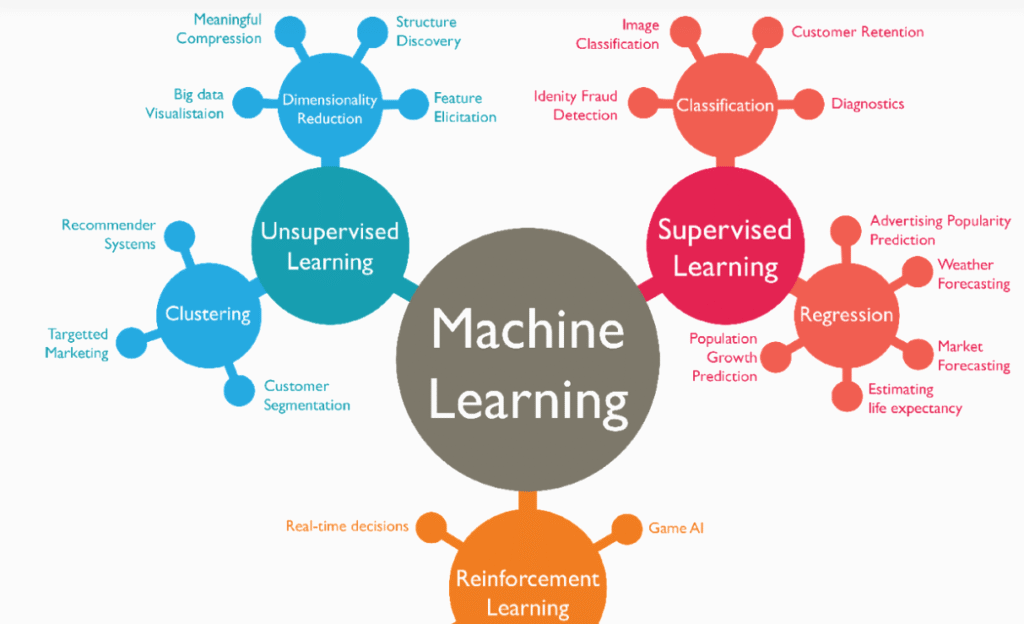

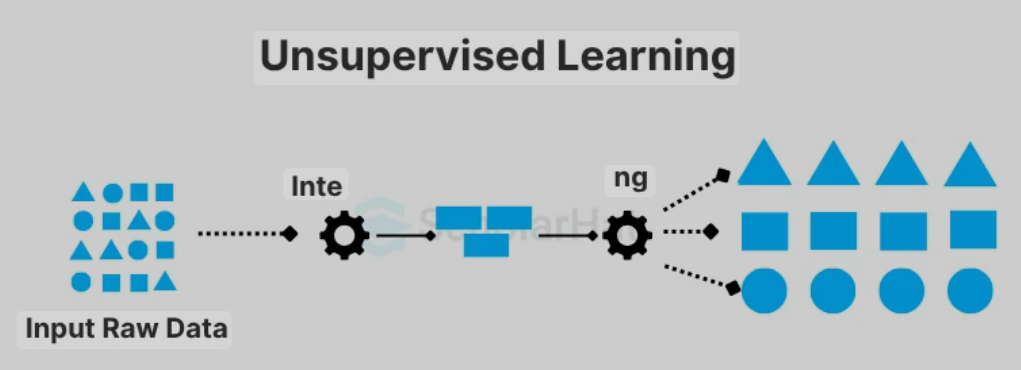

An Unsupervised Learning Algorithm (ULA) is a type of machine learning method that deals with data that has no predefined labels or target outputs. Unlike supervised learning, where the model is trained using examples of input-output pairs, unsupervised learning explores the structure and relationships within data without explicit guidance. The main goal is to discover hidden patterns, groupings, or features that naturally exist in the dataset.

Unsupervised learning operates by analyzing the inherent properties of data to reveal meaningful insights. It identifies similarities, differences, and relationships between variables, allowing the algorithm to form clusters, reduce dimensionality, or detect anomalies. This makes it particularly useful in situations where labeled data is scarce, expensive, or impossible to obtain. The algorithm essentially “learns by itself,” uncovering structure directly from the input data.

There are several categories of unsupervised learning, with clustering and dimensionality reduction being the most common. Clustering algorithms, such as K-Means or DBSCAN, group data points that share similar features, while dimensionality reduction techniques like PCA simplify complex datasets by retaining only their most important components. These methods help make large and unorganized datasets easier to understand and visualize.

Unsupervised learning plays a vital role in data exploration and preprocessing, helping to prepare datasets for further supervised learning tasks. For example, it can identify groups of customers with similar behaviors, reduce redundant information in large data collections, or find hidden trends in market data. This makes it an essential first step in many data science workflows, even when the final goal is prediction or classification.

Despite its strengths, unsupervised learning also presents challenges. Since there are no labeled outcomes, evaluating the performance of an unsupervised model can be difficult. The discovered patterns may not always have clear real-world meanings, and results often depend heavily on how data is represented and preprocessed. However, when applied correctly, these algorithms can reveal powerful insights that would otherwise remain hidden.

Common Unsupervised Learning Algorithms

K-Means Clustering

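K-Means is one of the most widely used clustering algorithms. It partitions data into k clusters by minimizing the sum of squared distances between each data point and the centroid of the cluster it is assigned to. Starting from k initial centroids, it alternates between two steps: assign every point to its nearest centroid, then move each centroid to the mean of the points assigned to it. The loop stops once the assignments no longer change.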

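This algorithm is simple, efficient, and effective for large datasets, although the number of clusters k must be chosen in advance and it works best when clusters are roughly spherical and similar in size. It’s used in customer segmentation, image compression, and anomaly detection, where identifying natural groupings within data is essential.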
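To make the assign-and-update loop concrete, here is a minimal pure-Python sketch (often called Lloyd's algorithm). The function name `kmeans`, its parameters, the seeded random initialization, and the toy points are illustrative assumptions, not a reference implementation; in practice a library version such as scikit-learn's `KMeans` adds k-means++ initialization and multiple restarts.

```python
import random

def kmeans(points, k, max_iters=100, seed=0):
    """Lloyd-style K-Means on a list of equal-length numeric tuples (illustrative sketch)."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # initialize with k randomly chosen input points
    labels = None
    for _ in range(max_iters):
        # Assignment step: each point joins the cluster of its nearest centroid
        # (squared Euclidean distance).
        new_labels = [
            min(range(k), key=lambda j: sum((a - b) ** 2 for a, b in zip(p, centroids[j])))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so the centroids are stable
        labels = new_labels
        # Update step: move each centroid to the mean of the points assigned to it.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # an empty cluster keeps its previous centroid
                centroids[j] = tuple(sum(coord) / len(members) for coord in zip(*members))
    return labels, centroids

# Hypothetical toy data: two well-separated groups of three points each.
points = [(1.0, 1.0), (1.5, 2.0), (1.0, 1.5), (8.0, 8.0), (8.5, 9.0), (9.0, 8.0)]
labels, centroids = kmeans(points, k=2)
```

Because the result depends on the starting centroids, running with several seeds and keeping the solution with the lowest within-cluster error is a common safeguard.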

Hierarchical Clustering

Hierarchical clustering builds a tree-like structure of clusters, either through agglomerative (bottom-up) or divisive (top-down) approaches. It doesn’t require specifying the number of clusters beforehand: a partition with any number of groups can be read off by cutting the tree at a chosen level.

The resulting dendrogram provides a visual representation of data relationships, allowing for flexible interpretation. It’s particularly useful in genetics, social network analysis, and document organization.
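A naive agglomerative sketch in pure Python follows, assuming single linkage (the distance between two clusters is the distance between their closest members). The `merges` list records what a dendrogram would draw, and stopping early at `n_clusters` plays the role of cutting the tree. The function name and data are hypothetical; SciPy's `scipy.cluster.hierarchy.linkage` and `dendrogram` are the usual tools for real data, and this version is far too slow for large inputs.

```python
import math

def agglomerative(points, n_clusters=1):
    """Bottom-up (agglomerative) clustering with single linkage (illustrative sketch).

    Starts from singleton clusters and repeatedly merges the closest pair.
    Stopping at n_clusters is equivalent to cutting the dendrogram at that level.
    """
    clusters = [[i] for i in range(len(points))]  # each cluster is a list of point indices
    merges = []  # (cluster_a, cluster_b, distance): the information a dendrogram draws

    def linkage(a, b):
        # Single linkage: distance between the closest pair of members.
        return min(math.dist(points[i], points[j]) for i in a for j in b)

    while len(clusters) > n_clusters:
        pairs = [(x, y) for x in range(len(clusters)) for y in range(x + 1, len(clusters))]
        x, y = min(pairs, key=lambda pair: linkage(clusters[pair[0]], clusters[pair[1]]))
        merges.append((clusters[x], clusters[y], linkage(clusters[x], clusters[y])))
        clusters[x] = clusters[x] + clusters[y]
        del clusters[y]  # y > x, so index x is unaffected
    return clusters, merges

points = [(0.0, 0.0), (0.0, 1.0), (5.0, 5.0), (5.0, 6.0), (20.0, 20.0)]
_, merges = agglomerative(points)                        # full tree, down to one cluster
three_clusters, _ = agglomerative(points, n_clusters=3)  # "cut" that leaves three groups
```

With single linkage the merge distances never decrease, which is why the dendrogram can be drawn with monotonically increasing heights.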

DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

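DBSCAN groups together closely packed points and marks points that lie alone in low-density regions as noise (outliers). Instead of a predefined number of clusters, it relies on two density parameters: a neighborhood radius (commonly called eps) and the minimum number of points needed within that radius to form a dense region.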

This makes DBSCAN ideal for discovering clusters of arbitrary shapes and handling noisy datasets. It’s widely used in spatial data analysis, fraud detection, and anomaly identification.
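The sketch below shows the core DBSCAN idea in pure Python: a point with at least `min_samples` neighbors within radius `eps` is a core point, clusters grow outward from core points, and anything unreachable is labeled noise (`-1`). The parameter names mirror scikit-learn's `DBSCAN`, but the function itself is an illustrative assumption; its brute-force neighbor search is quadratic, whereas libraries use spatial indexes.

```python
import math

NOISE = -1

def dbscan(points, eps, min_samples):
    """Label each point with a cluster id, or NOISE (-1) if it lies in a sparse region."""
    n = len(points)
    labels = [None] * n  # None = not yet visited

    def neighbors(i):
        # All points within eps of point i (including i itself).
        return [j for j in range(n) if math.dist(points[i], points[j]) <= eps]

    cluster_id = 0
    for i in range(n):
        if labels[i] is not None:
            continue
        seeds = neighbors(i)
        if len(seeds) < min_samples:
            labels[i] = NOISE  # may later be relabeled as a border point
            continue
        # i is a core point: start a new cluster and expand through density-reachable points.
        labels[i] = cluster_id
        queue = [j for j in seeds if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == NOISE:
                labels[j] = cluster_id  # border point: reachable, but not a core point
            if labels[j] is not None:
                continue
            labels[j] = cluster_id
            j_neighbors = neighbors(j)
            if len(j_neighbors) >= min_samples:
                queue.extend(j_neighbors)  # j is also a core point: keep expanding
        cluster_id += 1
    return labels

points = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0),
          (10.0, 10.0), (10.0, 11.0), (11.0, 10.0), (11.0, 11.0),
          (50.0, 50.0)]
labels = dbscan(points, eps=1.5, min_samples=3)
```

The isolated point at (50, 50) has no neighbors besides itself, so it ends up as noise rather than being forced into a cluster.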

Gaussian Mixture Models (GMM)

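GMM assumes that data points are generated from a mixture of several Gaussian distributions with unknown parameters, which are usually estimated with the expectation-maximization (EM) algorithm. Instead of a hard assignment based on distance, each point receives a probability of belonging to each cluster, computed from its likelihood under each component.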

This algorithm is more flexible than K-Means: because each component can have its own covariance, it can model elliptical clusters of different sizes and orientations. It’s used in speech recognition, image segmentation, and financial modeling where uncertainty and overlapping groups exist.
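As an illustration of likelihood-based soft assignment, this pure-Python sketch fits a one-dimensional mixture with expectation-maximization (EM). It assumes 1-D data, caller-supplied initial means, and unit starting variances; the function and the toy data are hypothetical. For real multivariate data, scikit-learn's `GaussianMixture` is the usual choice.

```python
import math

def normal_pdf(x, mean, var):
    return math.exp(-((x - mean) ** 2) / (2 * var)) / math.sqrt(2 * math.pi * var)

def fit_gmm_1d(xs, init_means, n_iters=50):
    """Fit a one-dimensional Gaussian mixture with EM (illustrative sketch)."""
    k = len(init_means)
    means, variances, weights = list(init_means), [1.0] * k, [1.0 / k] * k
    for _ in range(n_iters):
        # E-step: responsibilities = posterior probability that component j produced x.
        resp = []
        for x in xs:
            joint = [w * normal_pdf(x, m, v) for w, m, v in zip(weights, means, variances)]
            total = sum(joint)
            resp.append([p / total for p in joint])
        # M-step: re-estimate each component from the points it is responsible for.
        # (No safeguard here against a component collapsing to zero weight.)
        for j in range(k):
            nj = sum(r[j] for r in resp)
            weights[j] = nj / len(xs)
            means[j] = sum(r[j] * x for r, x in zip(resp, xs)) / nj
            var = sum(r[j] * (x - means[j]) ** 2 for r, x in zip(resp, xs)) / nj
            variances[j] = max(var, 1e-6)  # floor keeps the density finite
    return weights, means, variances, resp

xs = [1.0, 1.2, 0.8, 1.1, 0.9, 5.0, 5.2, 4.8, 5.1, 4.9]
weights, means, variances, resp = fit_gmm_1d(xs, init_means=[0.0, 6.0])
```

Each row of `resp` gives a point's membership probabilities across components, which is what distinguishes this from the hard labels produced by K-Means.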

Principal Component Analysis (PCA)

PCA is a dimensionality reduction technique that transforms high-dimensional data into a smaller set of uncorrelated components called principal components. It identifies directions of maximum variance in the data.

PCA is valuable for simplifying datasets, improving visualization, and enhancing performance in machine learning models. It’s widely used in image compression, genomics, and pattern recognition.
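The following sketch finds only the first principal component, using power iteration on the sample covariance matrix, and then projects the data onto it to get a one-dimensional representation. Power iteration is our choice for keeping the example dependency-free; libraries such as scikit-learn's `PCA` compute all components via a singular value decomposition. The functions and the toy rows are hypothetical.

```python
def first_principal_component(rows, n_iters=100):
    """Direction of maximum variance, found by power iteration on the covariance matrix."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    # Sample covariance matrix (d x d).
    cov = [[sum(c[a] * c[b] for c in centered) / (n - 1) for b in range(d)] for a in range(d)]
    v = [1.0] * d  # start vector; assumed not orthogonal to the top eigenvector
    for _ in range(n_iters):
        w = [sum(cov[a][b] * v[b] for b in range(d)) for a in range(d)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]  # renormalize to unit length each step
    return v, means

def project(rows, component, means):
    """1-D reduced representation: coordinate of each centered row along the component."""
    return [sum((r[j] - means[j]) * component[j] for j in range(len(r))) for r in rows]

# Toy data lying close to the line y = 2x.
rows = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2), (5.0, 9.9)]
pc, means = first_principal_component(rows)
scores = project(rows, pc, means)
```

Here the recovered direction is close to (1, 2) scaled to unit length, so the two original coordinates collapse into one score per row with little lost variance.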

Independent Component Analysis (ICA)

ICA separates a multivariate signal into additive, independent components. Unlike PCA, which focuses on maximizing variance, ICA focuses on maximizing statistical independence among components.

It’s commonly used in signal processing, such as separating mixed audio signals or removing noise from EEG and MRI data. ICA is powerful when the goal is to uncover hidden underlying factors.

t-SNE (t-Distributed Stochastic Neighbor Embedding)

t-SNE is a nonlinear dimensionality reduction algorithm designed for visualizing high-dimensional data in two or three dimensions. It preserves the local structure of data, making clusters more apparent in visual form.

It’s especially effective in understanding complex datasets like images or genetic data. t-SNE is widely used for data exploration and visualization rather than direct prediction tasks.

Autoencoders

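Autoencoders are a type of neural network designed to learn efficient representations of data. An encoder compresses the input into a lower-dimensional form and a decoder reconstructs the input from it; the network is trained to minimize the reconstruction error, so the compressed form must retain the most important information.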

These models are used for dimensionality reduction, denoising, and anomaly detection. Autoencoders are particularly valuable in applications involving large-scale unstructured data such as images and text.

Apriori Algorithm

The Apriori algorithm is used for discovering frequent itemsets and association rules in transactional datasets. It identifies relationships between variables in large databases based on their co-occurrence patterns.

It’s commonly used in market basket analysis, recommendation systems, and retail analytics. Apriori helps businesses understand customer buying habits and create cross-selling strategies.
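Below is a compact sketch of the Apriori level-wise search in pure Python: frequent itemsets of size k-1 are joined into size-k candidates, candidates with an infrequent subset are pruned, and the rest are counted. The function, the `min_support` fraction, and the toy baskets are illustrative assumptions. The last line derives one association rule's confidence from the supports.

```python
from itertools import combinations

def apriori(transactions, min_support):
    """Return {frozenset(itemset): support} for every itemset with support >= min_support."""
    transactions = [frozenset(t) for t in transactions]
    n = len(transactions)

    def support(itemset):
        # Fraction of transactions that contain every item in the itemset.
        return sum(1 for t in transactions if itemset <= t) / n

    items = {item for t in transactions for item in t}
    current = {frozenset([i]) for i in items if support(frozenset([i])) >= min_support}
    frequent = {s: support(s) for s in current}
    k = 2
    while current:
        # Join: combine frequent (k-1)-itemsets into candidate k-itemsets.
        candidates = {a | b for a in current for b in current if len(a | b) == k}
        # Prune (the Apriori property): every (k-1)-subset of a frequent itemset is frequent.
        candidates = {c for c in candidates
                      if all(frozenset(s) in current for s in combinations(c, k - 1))}
        current = {c for c in candidates if support(c) >= min_support}
        frequent.update({c: support(c) for c in current})
        k += 1
    return frequent

baskets = [
    {"bread", "milk"},
    {"bread", "diapers", "beer", "eggs"},
    {"milk", "diapers", "beer", "cola"},
    {"bread", "milk", "diapers", "beer"},
    {"bread", "milk", "diapers", "cola"},
]
freq = apriori(baskets, min_support=0.6)
# Confidence of the rule {diapers} -> {beer}: support(both) / support(diapers).
conf = freq[frozenset({"diapers", "beer"})] / freq[frozenset({"diapers"})]
```

The pruning step is what keeps the search tractable: a candidate like {bread, diapers, beer} is discarded without counting because its subset {bread, beer} is already known to be infrequent.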

Self-Organizing Maps (SOM)

Self-Organizing Maps are neural network-based algorithms that map high-dimensional data onto a low-dimensional grid of nodes, usually two-dimensional. They preserve the topological relationships between data points, meaning similar inputs are mapped to nearby nodes.

SOMs are particularly useful for data visualization, clustering, and pattern recognition. They are widely applied in fields such as market analysis, image classification, and bioinformatics to uncover hidden structures in complex datasets.

Fuzzy C-Means Clustering

Fuzzy C-Means is an extension of K-Means where each data point can belong to multiple clusters with varying degrees of membership. Instead of a hard assignment, each point receives a membership value between 0 and 1 for every cluster, and its memberships across all clusters sum to 1.

This flexibility allows for smoother transitions between clusters and better handling of overlapping data. It’s commonly used in medical imaging, pattern recognition, and decision-making systems that deal with uncertainty.
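Here is a minimal one-dimensional sketch of fuzzy c-means using the standard alternating updates, assuming the common fuzzifier value m = 2 and caller-supplied starting centers. The function and data are hypothetical. The point at 5.0 sits halfway between the two groups, so its memberships come out close to 0.5 each.

```python
def fuzzy_c_means(xs, init_centers, m=2.0, n_iters=100):
    """One-dimensional fuzzy c-means (illustrative sketch). Returns the centers and a
    membership matrix u, where u[i][j] is point i's degree of membership in cluster j."""
    centers = list(init_centers)
    c = len(centers)
    for _ in range(n_iters):
        # Membership update: u_ij = 1 / sum_k (d_ij / d_ik) ** (2 / (m - 1)).
        u = []
        for x in xs:
            d = [abs(x - cj) or 1e-12 for cj in centers]  # avoid dividing by zero
            u.append([1.0 / sum((d[j] / d[k]) ** (2 / (m - 1)) for k in range(c))
                      for j in range(c)])
        # Center update: average of all points, weighted by membership ** m.
        for j in range(c):
            w = [u[i][j] ** m for i in range(len(xs))]
            centers[j] = sum(wi * x for wi, x in zip(w, xs)) / sum(w)
    return centers, u

xs = [1.0, 1.5, 2.0, 8.0, 8.5, 9.0, 5.0]  # 5.0 sits halfway between the two groups
centers, u = fuzzy_c_means(xs, init_centers=[0.0, 10.0])
```

Raising the fuzzifier m above 2 makes memberships softer; as m approaches 1 the assignments approach the hard labels of K-Means.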

Restricted Boltzmann Machines (RBM)

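Restricted Boltzmann Machines are stochastic neural networks that learn a probability distribution over their input data. They consist of a visible layer and a hidden layer, with connections only between the two layers and none within a layer, which is the restriction that gives the model its name. Together the layers capture complex dependencies between variables.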

RBMs are often used as building blocks for deep learning models and can perform feature extraction, collaborative filtering, and dimensionality reduction. Their ability to learn unsupervised representations makes them powerful for understanding hidden patterns.

Variational Autoencoders (VAE)

Variational Autoencoders are advanced generative models that learn to represent data in a continuous latent space. They are trained to encode inputs into distributions rather than single points, allowing for smooth interpolation between data samples.

VAEs are used for data generation, anomaly detection, and image synthesis. They are a cornerstone of modern unsupervised learning, enabling systems to understand and recreate complex data structures.

Deep Belief Networks (DBN)

Deep Belief Networks are composed of multiple layers of Restricted Boltzmann Machines, stacked so that each layer learns features from the outputs of the layer below it. The layers are typically trained greedily, one at a time, and this hierarchical structure captures increasingly abstract representations of data.

DBNs are effective in unsupervised pre-training for deep learning models. They have been used in speech recognition, image classification, and dimensionality reduction, especially before the rise of fully supervised deep networks.

Generative Adversarial Networks (GANs)

Generative Adversarial Networks consist of two neural networks, a generator and a discriminator, that compete against each other. The generator creates synthetic data, while the discriminator tries to tell those samples apart from real data; each network improves by trying to outdo the other.

GANs are known for generating highly realistic images, videos, and audio. They are also used in data augmentation, art creation, and scientific simulations, pushing the boundaries of what unsupervised learning can achieve.

Kernel PCA (Principal Component Analysis with Kernels)

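Kernel PCA is an extension of traditional PCA that uses kernel functions to capture nonlinear relationships in data. Conceptually, it maps the original data into a higher-dimensional feature space and performs PCA there; the kernel trick lets it work only with pairwise kernel values, so the mapping never has to be computed explicitly.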

This lets it capture curved structure that linear PCA misses. Kernel PCA is used in image recognition, bioinformatics, and denoising applications where linear models fall short.

Independent Subspace Analysis (ISA)

Independent Subspace Analysis extends Independent Component Analysis by grouping related features into subspaces rather than treating them as isolated components. It’s designed to handle more complex, structured data.

ISA is valuable for feature extraction and unsupervised representation learning, particularly in image and audio signal processing. It allows models to uncover both independent and correlated hidden factors within data.

Non-Negative Matrix Factorization (NMF)

Non-Negative Matrix Factorization approximates a non-negative data matrix as the product of two smaller non-negative matrices, so features can only be combined additively, never subtracted. This leads to more interpretable results where parts contribute positively to the whole.

NMF is widely used in topic modeling, image analysis, and music signal processing. Its ability to produce meaningful, human-understandable features makes it a preferred choice for exploratory data analysis.
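This sketch applies the well-known multiplicative update rules for squared-error NMF (Lee and Seung) in pure Python. Because every update multiplies non-negative quantities, `W` and `H` stay non-negative throughout. The helper names, the random initialization, the iteration count, and the rank-2 toy matrix are assumptions for illustration; scikit-learn's `NMF` offers this solver (`solver="mu"`) along with faster alternatives.

```python
import random

def matmul(A, B):
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def nmf(V, rank, n_iters=500, seed=0, eps=1e-9):
    """Approximate a non-negative matrix V (n x m) as W (n x rank) times H (rank x m)."""
    rng = random.Random(seed)
    n, m = len(V), len(V[0])
    W = [[rng.random() + 0.1 for _ in range(rank)] for _ in range(n)]
    H = [[rng.random() + 0.1 for _ in range(m)] for _ in range(rank)]
    for _ in range(n_iters):
        # Multiplicative updates for squared error. Each factor is non-negative,
        # so W and H never pick up negative entries.
        Wt = transpose(W)
        num, den = matmul(Wt, V), matmul(matmul(Wt, W), H)
        H = [[H[i][j] * num[i][j] / (den[i][j] + eps) for j in range(m)] for i in range(rank)]
        Ht = transpose(H)
        num, den = matmul(V, Ht), matmul(W, matmul(H, Ht))
        W = [[W[i][j] * num[i][j] / (den[i][j] + eps) for j in range(rank)] for i in range(n)]
    return W, H

def reconstruction_error(V, W, H):
    """Frobenius norm of V - WH."""
    WH = matmul(W, H)
    return sum((v - x) ** 2 for vr, xr in zip(V, WH) for v, x in zip(vr, xr)) ** 0.5

V = [[5, 4, 7], [4, 5, 5], [9, 9, 12], [1, 2, 1]]  # toy non-negative matrix of rank 2
W, H = nmf(V, rank=2)
```

Each row of `H` can be read as a non-negative "part", and each row of `W` says how much of each part a data row contains.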

Spectral Clustering

Spectral Clustering is a graph-based algorithm that builds a similarity matrix over the data, forms the corresponding graph Laplacian, and uses a small number of the Laplacian’s eigenvectors to embed the data in fewer dimensions before clustering. In that embedding, clusters are more easily separable using standard techniques like K-Means.

This approach excels in identifying clusters of complex, non-convex shapes. It’s often used in image segmentation, social network analysis, and bioinformatics where relationships between points are better represented as graphs rather than distances.

Manifold Learning

Manifold Learning focuses on discovering the low-dimensional structures hidden within high-dimensional data. It assumes that data points lie on or near a smooth, nonlinear surface, called a manifold, embedded in a higher-dimensional space.

Algorithms like Isomap and Locally Linear Embedding (LLE) are examples of manifold learning methods. They are particularly useful in visualization, pattern recognition, and feature extraction from complex datasets such as images and speech.

Affinity Propagation

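Affinity Propagation identifies clusters by exchanging messages between data points until a stable set of “exemplars” (representative data points) emerges. Unlike K-Means, it doesn’t require specifying the number of clusters in advance; the count is influenced instead by a “preference” value that sets how likely each point is to become an exemplar.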

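It can discover clusters of varying sizes, but it works on a full matrix of pairwise similarities, so its time and memory costs grow quadratically with the number of points. This makes it better suited to small and medium-sized datasets than to very large ones. It’s applied in image analysis, document clustering, and recommendation systems, where natural groupings need to be uncovered dynamically.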
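The message-passing loop can be sketched in pure Python as below, following the standard responsibility and availability updates with damping. Both message matrices are n by n and each iteration touches every pair of points, which is where the quadratic cost comes from. Setting the diagonal preference to the median similarity mirrors scikit-learn's `AffinityPropagation` default; the function itself and the toy data are hypothetical, and the code assumes at least one exemplar emerges.

```python
import statistics

def affinity_propagation(S, damping=0.5, n_iters=200):
    """Exemplar clustering from an n x n similarity matrix S (illustrative sketch).

    S[k][k] holds each point's "preference" for being an exemplar.
    """
    n = len(S)
    R = [[0.0] * n for _ in range(n)]  # responsibilities r(i, k)
    A = [[0.0] * n for _ in range(n)]  # availabilities a(i, k)
    for _ in range(n_iters):
        # r(i,k) = s(i,k) - max over k' != k of [a(i,k') + s(i,k')]
        for i in range(n):
            vals = [A[i][k] + S[i][k] for k in range(n)]
            best = max(range(n), key=vals.__getitem__)
            second = max(vals[k] for k in range(n) if k != best)
            for k in range(n):
                new = S[i][k] - (second if k == best else vals[best])
                R[i][k] = damping * R[i][k] + (1 - damping) * new
        # a(k,k) = sum of positive r(i',k) over i' != k
        # a(i,k) = min(0, r(k,k) + sum of positive r(i',k) over i' not in {i, k})
        for k in range(n):
            pos = [max(0.0, R[i][k]) for i in range(n)]
            total = sum(pos) - pos[k]
            for i in range(n):
                new = total if i == k else min(0.0, R[k][k] + total - pos[i])
                A[i][k] = damping * A[i][k] + (1 - damping) * new
    exemplars = [k for k in range(n) if R[k][k] + A[k][k] > 0]
    # Each point joins its most similar exemplar; exemplars label themselves.
    labels = [i if i in exemplars else max(exemplars, key=lambda k: S[i][k]) for i in range(n)]
    return exemplars, labels

xs = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
n = len(xs)
S = [[-((a - b) ** 2) for b in xs] for a in xs]  # similarity = negative squared distance
# A common default preference is the median of the off-diagonal similarities.
pref = statistics.median(S[i][k] for i in range(n) for k in range(n) if i != k)
for i in range(n):
    S[i][i] = pref
exemplars, labels = affinity_propagation(S)
```

Raising the preference produces more exemplars (and so more clusters); lowering it produces fewer.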

Mean Shift Clustering

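Mean Shift Clustering is a non-parametric algorithm that doesn’t assume any specific number of clusters. It repeatedly moves each point to the mean of the data that falls within a window around it, which climbs toward a mode (densest region) of the data distribution; points that converge to the same mode form one cluster. The window size, or bandwidth, is the main parameter.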

This makes it ideal for discovering clusters of arbitrary shapes and densities. Mean Shift is widely used in computer vision, object tracking, and image segmentation, where data forms irregular and naturally distinct regions.
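A minimal one-dimensional sketch with a flat kernel: each point repeatedly jumps to the mean of the original data within `bandwidth`, and points that end at the same mode share a cluster. Merging modes that land within half a bandwidth of each other is a simplifying assumption here, as are the function name and data; scikit-learn's `MeanShift` uses the same `bandwidth` idea for multivariate data.

```python
def mean_shift_1d(xs, bandwidth, max_iters=100, tol=1e-9):
    """Flat-kernel mean shift on 1-D data (illustrative sketch). Returns (labels, modes)."""
    converged = []
    for x in xs:
        y = x
        for _ in range(max_iters):
            # Mean of all original data points within `bandwidth` of the current position.
            window = [v for v in xs if abs(v - y) <= bandwidth]
            new_y = sum(window) / len(window)
            if abs(new_y - y) < tol:
                break
            y = new_y
        converged.append(y)
    # Points whose trajectories end within bandwidth / 2 of the same mode share a cluster.
    modes, labels = [], []
    for y in converged:
        for idx, mode in enumerate(modes):
            if abs(y - mode) < bandwidth / 2:
                labels.append(idx)
                break
        else:
            modes.append(y)
            labels.append(len(modes) - 1)
    return labels, modes

xs = [1.0, 1.2, 0.9, 1.1, 5.0, 5.3, 4.8, 5.1, 12.0]
labels, modes = mean_shift_1d(xs, bandwidth=1.0)
```

The number of clusters falls out of the data and the bandwidth: a wider window merges nearby modes, a narrower one splits them.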

Graph-Based Clustering

Graph-Based Clustering represents data as a network of nodes and edges, where the connections capture similarity between points. Clustering is achieved by identifying densely connected subgraphs within this network.

It’s particularly powerful for data with inherent relationships, such as social networks or molecular structures. This approach is used in community detection, recommendation systems, and fraud analysis, where relational patterns matter more than distances.
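One of the simplest graph-based schemes is sketched below: drop edges whose similarity weight falls under a threshold, then treat each connected component as a cluster, found with breadth-first search. This is an illustrative assumption rather than a standard community-detection algorithm; methods such as Louvain or label propagation look for densely connected groups instead of merely connected ones. The weighted edge list describes a hypothetical social graph of two tight friend groups joined by one weak tie.

```python
from collections import defaultdict, deque

def graph_clusters(n_nodes, weighted_edges, min_weight):
    """Keep edges whose similarity weight >= min_weight; each connected component is a cluster."""
    adj = defaultdict(list)
    for u, v, w in weighted_edges:
        if w >= min_weight:  # weak ties are dropped, so loosely linked groups separate
            adj[u].append(v)
            adj[v].append(u)
    labels = [-1] * n_nodes
    cluster = 0
    for start in range(n_nodes):
        if labels[start] != -1:
            continue
        labels[start] = cluster
        queue = deque([start])
        while queue:  # breadth-first search over the remaining strong edges
            node = queue.popleft()
            for nb in adj[node]:
                if labels[nb] == -1:
                    labels[nb] = cluster
                    queue.append(nb)
        cluster += 1
    return labels

# (node_a, node_b, similarity): two triangles linked by one weak edge (2, 3).
edges = [(0, 1, 0.9), (1, 2, 0.8), (0, 2, 0.85),
         (3, 4, 0.9), (4, 5, 0.95), (3, 5, 0.8),
         (2, 3, 0.1)]
labels = graph_clusters(6, edges, min_weight=0.5)
```

Lowering `min_weight` below the weak tie's weight would keep edge (2, 3) and merge both groups into a single component.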

Isolation Forest

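Isolation Forest is an anomaly detection algorithm based on the principle that anomalies are easier to isolate than normal points. It builds many random trees, each splitting the data on a randomly chosen feature at a random value, and measures how many splits are required to isolate a given instance. Anomalies tend to need fewer splits, so a short average path length across the trees translates into a high anomaly score.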

This technique is efficient, scalable, and effective for detecting outliers in large datasets. It’s frequently applied in cybersecurity, fraud detection, and system monitoring, making it a valuable tool for unsupervised anomaly identification.
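The sketch below follows the usual Isolation Forest recipe in pure Python: each tree is grown on a random subsample by picking a random feature and a random split value, depth is capped at ceil(log2) of the subsample size, and the average path length is turned into a score with 2^(-E[h]/c(n)), where c(n) is the average path length of an unsuccessful binary-search-tree lookup. Function names, defaults, and data are illustrative assumptions; scikit-learn's `IsolationForest` is the production-grade equivalent.

```python
import math
import random

EULER_GAMMA = 0.5772156649

def avg_path(n):
    """Expected path length of an unsuccessful binary-search-tree lookup among n points."""
    if n <= 1:
        return 0.0
    if n == 2:
        return 1.0
    return 2 * (math.log(n - 1) + EULER_GAMMA) - 2 * (n - 1) / n

def build_tree(rows, depth, max_depth, rng):
    # Stop when the point is isolated, all values coincide, or the depth limit is hit.
    if depth >= max_depth or len(rows) <= 1:
        return {"size": len(rows)}
    dim = rng.randrange(len(rows[0]))  # random feature
    lo, hi = min(r[dim] for r in rows), max(r[dim] for r in rows)
    if lo == hi:
        return {"size": len(rows)}
    split = rng.uniform(lo, hi)  # random split value within the feature's range
    return {
        "dim": dim,
        "split": split,
        "left": build_tree([r for r in rows if r[dim] < split], depth + 1, max_depth, rng),
        "right": build_tree([r for r in rows if r[dim] >= split], depth + 1, max_depth, rng),
    }

def path_length(x, node, depth=0):
    if "size" in node:
        # Leaf: add the expected depth of the subtree that was not grown further.
        return depth + avg_path(node["size"])
    child = node["left"] if x[node["dim"]] < node["split"] else node["right"]
    return path_length(x, child, depth + 1)

def isolation_scores(rows, n_trees=100, sample_size=256, seed=0):
    """Anomaly score in (0, 1]: close to 1 means easy to isolate (likely anomaly)."""
    rng = random.Random(seed)
    psi = min(sample_size, len(rows))  # needs at least 2 rows
    max_depth = math.ceil(math.log2(psi))
    trees = [build_tree(rng.sample(rows, psi), 0, max_depth, rng) for _ in range(n_trees)]
    scores = []
    for x in rows:
        mean_depth = sum(path_length(x, t) for t in trees) / n_trees
        scores.append(2 ** (-mean_depth / avg_path(psi)))
    return scores

# A tight 5 x 5 grid of normal points plus one far-away outlier.
rows = [(i * 0.1, j * 0.1) for i in range(5) for j in range(5)] + [(5.0, 5.0)]
scores = isolation_scores(rows)
```

The outlier is usually split off by the very first random cut, so its average depth is close to 1 and its score ends up well above those of the grid points.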